Learning, adaptation, and adaptive behaviors

Last updated on 29 February 2012.

What is this about?

We are interested in understanding both the mechanisms (the "how") and the functions (the "what") responsible for estimation, adaptation, and learning on multiple timescales and in a variety of systems. We believe that learning from examples is a problem faced by biological systems at all scales of organization. Such problems, which lie at the intersection of physics and biology, are universal, while their solutions may be organism-specific. Focusing on physics-style mathematical models of biological processes allows us to uncover phenomena that generalize across different living organisms, something that traditional empirical approaches cannot do alone. Accordingly, we study learning and adaptation at the cellular, neural, and behavioral scales, and we have recently started to bring evolutionary adaptation into the same framework. We also work on the fundamentals of learning theory.

Specific questions include:

- How should information processing strategies change when properties of the environment surrounding the organism change?

- Do they change as predicted in real living systems? Why or why not? What physiological mechanisms underlie the changes?

- Do animals exhibit behavior that is a manifestation of near-optimal learning?

To answer some of these questions, we first need to understand how to quantify animal behavior, and we spend considerable effort on this problem as well.

Some of the questions we address here are closely related to our other projects, such as understanding how we make visual inferences, how populations adapt in evolutionary contexts, and how the quality of signal processing can be quantified. Our recent review (Nemenman, 2011) illuminates some of these connections.

Results

- We have explained how learning and prediction can be quantified using information-theoretic ideas. This led to the definition of general "complexity" classes of time series, distinguished by how easy it is to learn the underlying dynamics that produced them (Bialek et al., 2001a; Bialek et al., 2001b). This, in turn, allowed us to propose an experimental framework for distinguishing different types of learning performed by animals (Nemenman, 2005).

- We have contributed to the creation of learning-theoretic algorithms for inferring continuous probability distributions (Nemenman and Bialek, 2002) and entropies.

- Illustrating how these questions relate to studies of biological information processing, we have shown (Nemenman et al., 2007) how the neural code in the fly H1 neuron adapts to the changing external signal on very fast timescales.

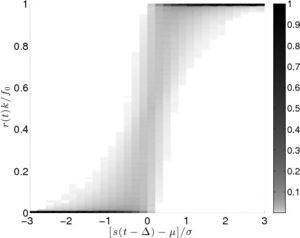

- We have argued that certain molecular signaling systems have built-in mechanisms for performing an adaptive response known as gain control (Nemenman, 2012). The figure to the right shows the distribution of responses, conditional on the stimulus, in such molecular circuits. This distribution is insensitive to changes in the standard deviation of the signal distribution.
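The information-theoretic view of learning in the first result above can be illustrated with predictive information: the mutual information between a window of a signal's past and a window of its future. The sketch below is a toy, not code from Bialek et al. (2001a). It assumes a stationary binary sequence, uses naive plug-in entropy estimates, and computes I_pred(k) = 2H(k) - H(2k) from the block entropies H(k). The test signal, a symmetric binary Markov chain produced by the hypothetical helper `markov_chain`, has predictive information that stays finite as k grows, which in that framework is the simplest case. Plug-in estimates like these become biased when words are long relative to the amount of data, which is one reason dedicated entropy estimators are needed.

```python
import math
import random
from collections import Counter


def block_entropy(seq, m):
    """Plug-in (maximum-likelihood) entropy, in bits, of the length-m words in seq."""
    counts = Counter(tuple(seq[i:i + m]) for i in range(len(seq) - m + 1))
    total = sum(counts.values())
    return -sum((c / total) * math.log2(c / total) for c in counts.values())


def predictive_information(seq, k):
    """Estimate I(past k symbols; next k symbols) as 2*H(k) - H(2k).

    Assumes stationarity, so the past and future windows share the entropy H(k).
    """
    return 2.0 * block_entropy(seq, k) - block_entropy(seq, 2 * k)


def markov_chain(n, p_flip, seed=0):
    """Hypothetical test signal: a symmetric binary Markov chain that flips
    its state with probability p_flip at each step."""
    rng = random.Random(seed)
    x = [rng.randint(0, 1)]
    for _ in range(n - 1):
        x.append(x[-1] ^ int(rng.random() < p_flip))
    return x


seq = markov_chain(100_000, p_flip=0.1)
# Exact value for this chain: 1 - H2(0.1) bits, for every window length k >= 1.
h2 = -(0.1 * math.log2(0.1) + 0.9 * math.log2(0.9))
print(f"exact: {1.0 - h2:.3f} bits")
for k in (1, 2, 4):
    print(f"k={k}: estimated I_pred = {predictive_information(seq, k):.3f} bits")
```

For this chain the exact predictive information is 1 - H2(0.1), about 0.531 bits, for every window length, where H2 is the binary entropy function; the plug-in estimates should agree with it up to sampling error and a small positive bias that grows with k.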
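The insensitivity to the signal's standard deviation described in the last result can be illustrated with a toy adaptive element that divides its input by a running estimate of the input's scale. This is a minimal sketch assuming simple divisive normalization with an exponentially weighted estimate of the input's second moment; the function `gain_controlled_response` and its timescale parameter `tau` are hypothetical, and this is not the molecular mechanism analyzed in Nemenman, 2012. Because the running estimate scales with the signal, multiplying the stimulus by any positive constant leaves the response unchanged.

```python
import math
import random
import statistics


def gain_controlled_response(signal, tau=50.0):
    """Hypothetical adaptive element: divide each input by a running RMS estimate.

    tau is an assumed adaptation timescale, in samples, of an exponentially
    weighted moving average of the squared input.
    """
    if not signal:
        return []
    alpha = 1.0 / tau
    var_est = signal[0] ** 2  # initialize from the first sample so scaling is preserved
    out = []
    for s in signal:
        # Update the running estimate of the input's second moment.
        var_est = (1.0 - alpha) * var_est + alpha * s * s
        # Divisive normalization; an all-zero history gives zero output.
        out.append(s / math.sqrt(var_est) if var_est > 0 else 0.0)
    return out


# Same Gaussian noise, presented at three very different amplitudes.
rng = random.Random(0)
base = [rng.gauss(0.0, 1.0) for _ in range(20_000)]
for sigma in (0.1, 1.0, 10.0):
    stimulus = [sigma * x for x in base]
    response = gain_controlled_response(stimulus)[1_000:]  # drop start-up transient
    print(f"sigma={sigma:5.1f}  stimulus sd={statistics.stdev(stimulus):7.3f}  "
          f"response sd={statistics.stdev(response):.3f}")
```

Reusing one noise realization across the three values of sigma makes the invariance exact rather than merely statistical: the stimulus standard deviation scales with sigma, while the printed response standard deviation is the same in every row.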

Future work

We are continuing to enumerate simple adaptive mechanisms in canonical neural and molecular circuits. In addition, much of our future work in these directions will center on studying learning and adaptation in empirical systems. We have collaborations to do this in

We are always looking for more collaborations. To understand such adaptive behaviors, it is important to quantify them. To this end, we are working in a field that we have termed Physics of Behavior. Finally, we are also continuing to make connections between learning theory and evolutionary processes.